Retention Curves: Understanding Early User Behavior

Retention curves don't lie. They show how many users stick around over time and, more importantly, where they drop off. Reading these curves correctly tells you whether your onboarding creates lasting users or temporary visitors. According to 2025 B2B SaaS benchmarks from Pendo analyzing thousands of companies, the average Day 1 retention rate sits around 25-30%, while Day 30 retention drops to just 5-7%. Most apps lose nearly all their users within a month. Understanding these patterns and how your product stacks up lets you systematically improve onboarding.

This guide covers how to create, read, and act on retention curve analysis using 2025 benchmarks and proven diagnostic approaches.

Day 1 retention too low?

Create step-by-step guides that deliver value faster and keep users coming back with Glitter AI.

What Are Retention Curves?

Retention curves track the percentage of users who return to your product over time, starting from their first use.

Basic Structure

X-Axis: Time (days, weeks, or months since signup)

Y-Axis: Percentage of original cohort still active

Example:

Day 0: 100% (all users start here)

Day 1: 55%

Day 7: 32%

Day 14: 24%

Day 30: 18%

Types of Retention

N-Day Retention:

Were users active on exactly day N?

Bounded Retention:

Were users active during a period (Week 1, Month 1)?

Unbounded Retention:

Were users active on day N or any day after?
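The three definitions can give different numbers for the same cohort. Here is a minimal Python sketch that makes the difference concrete. The `activity` data and the function names are made up for illustration; each user maps to the set of days since signup on which they were active.

```python
# Hypothetical cohort: user -> set of day offsets (days since signup) with activity.
# Day 0 is the signup day itself.
activity = {
    "u1": {0, 1, 7},
    "u2": {0, 3},
    "u3": {0, 9},
    "u4": {0},
}

def n_day_retention(activity, n):
    """Share of the cohort active on exactly day n."""
    retained = sum(1 for days in activity.values() if n in days)
    return retained / len(activity)

def bounded_retention(activity, start, end):
    """Share of the cohort active on at least one day from start to end, inclusive."""
    retained = sum(
        1 for days in activity.values() if any(start <= d <= end for d in days)
    )
    return retained / len(activity)

def unbounded_retention(activity, n):
    """Share of the cohort active on day n or on any later day."""
    retained = sum(1 for days in activity.values() if any(d >= n for d in days))
    return retained / len(activity)

print(n_day_retention(activity, 7))       # only u1 was active on exactly day 7
print(bounded_retention(activity, 1, 7))  # u1 and u2 were active during days 1-7
print(unbounded_retention(activity, 7))   # u1 and u3 were active on day 7 or later
```

Whichever definition you pick, use the same one every time you compare cohorts or benchmarks, since the numbers are not interchangeable.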

Retention vs. Churn

Retention Rate: % of users who return

Churn Rate: % of users who don't return

Relationship: Retention + Churn = 100%

Reading Retention Curves

The Shape Tells the Story

Healthy Curve:

Steep initial drop, then flattens—users who stay early tend to stay.

Problematic Curve:

Continues declining without flattening—no stable user base forming.

Best-Case Curve:

Minimal initial drop, quick flattening—strong product-market fit.

Key Inflection Points

Day 1:

Immediate value perception. Did first experience deliver?

Day 7:

Habit formation period. Did users establish routines?

Day 30:

Long-term potential. Will users become regulars?

Curve Patterns

Pattern: Cliff

Day 0: 100%

Day 1: 20%

Day 7: 15%

Problem: First experience fails to deliver value.

Pattern: Gradual Decay

Day 0: 100%

Day 1: 60%

Day 7: 40%

Day 30: 10%

Problem: No stable user base forming.

Pattern: Healthy Retention

Day 0: 100%

Day 1: 55%

Day 7: 35%

Day 30: 25%

Day 90: 22%

Healthy: Curve flattens, stable user base.
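If you want to label curve shapes programmatically, one rough approach is to check Day 1 against a floor and then compare the last two measured points. The sketch below is only a heuristic: the function name and both thresholds (a 30% Day 1 floor and a 15% maximum late drop) are assumptions chosen for illustration, not industry standards.

```python
def describe_shape(curve, poor_day1=0.30, max_late_drop=0.15):
    """Label a retention curve given as {day: fraction retained}.

    Both thresholds are illustrative assumptions. The curve must include
    day 1 and at least two measured days after day 0.
    """
    if curve[1] < poor_day1:
        return "cliff"
    days = sorted(d for d in curve if d > 0)
    earlier, latest = days[-2], days[-1]
    # Relative drop between the last two measured points.
    late_drop = (curve[earlier] - curve[latest]) / curve[earlier]
    return "flattening" if late_drop <= max_late_drop else "still declining"

# The three example patterns from this section.
cliff = {0: 1.00, 1: 0.20, 7: 0.15}
gradual_decay = {0: 1.00, 1: 0.60, 7: 0.40, 30: 0.10}
healthy = {0: 1.00, 1: 0.55, 7: 0.35, 30: 0.25, 90: 0.22}

for name, curve in [("cliff", cliff), ("gradual decay", gradual_decay), ("healthy", healthy)]:
    print(name, "->", describe_shape(curve))
```

Note that this compares the last two points regardless of how far apart they are, so it works best when every curve is measured on the same days.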

Benchmarks by Time Period

Day 1 Retention

What It Measures:

Day 1 retention tells you whether users got enough value in their first session to come back the next day. It's the earliest and most critical indicator of whether your onboarding works and whether you have product-market fit.

2025 Industry Benchmarks:

Day 1 retention varies a lot by industry and product type. Productivity apps see a meager 17.1%, while utilities aren't much better at 18.3%. Banking apps hit 30.3%, which makes sense given how critical financial tools are. Social media apps start at 26.3%. For B2B SaaS specifically, benchmarks run higher than consumer apps: below 30% is poor, 40-50% is average, 50-60% is good, and 60%+ is excellent.

Primary Influences:

First-time experience determines whether users encounter clarity or confusion, value or emptiness, success or frustration. Time to value matters a lot here. Apps that activate users within 3 minutes see nearly 2x higher retention rates according to UXCam research. Onboarding quality encompasses how well you guide users, reduce friction, handle empty states, and orchestrate moments that lead to the "aha" realization of product value.

Day 7 Retention

What It Measures:

Day 7 retention shows whether users have started forming habits and working your product into their routines. It represents the transition from initial curiosity to actual behavioral adoption.

2025 Industry Benchmarks:

Day 7 performance varies dramatically by sector. Productivity apps average just 7.2%, utilities fall to 6.8%. That's a lot of early drop-off. Banking apps maintain 17.6% by Day 7, showing better habit formation around financial tools. Social media apps drop to 9.3% by Day 7, with Statista data showing further decline to 3.9% by Day 30. For B2B SaaS, below 15% is poor, 20-25% is average, 25-35% is good, and 35%+ is excellent. Analysis of 83 B2B SaaS companies shows software keeps about 39% of users after one month on average, with roughly 30% still returning after three months.

Core Influences:

Core value delivery determines whether users experience enough benefit to justify continued use rather than writing off the product as a failed experiment. Feature engagement shows whether users discover and adopt capabilities beyond their initial use case, expanding utility and increasing switching costs. Use case fit measures alignment between what your product does and what users need. Products solving genuine pain points naturally retain better than those addressing peripheral problems or offering marginal improvements over alternatives.

Day 30 Retention

What It Measures:

Day 30 retention is the real test of whether users will become long-term customers or churn after initial exploration. This is typically where retention curves flatten into stable patterns.

2025 Industry Benchmarks:

The numbers at 30 days can be sobering. Average Day 30 retention across mobile apps sits at just 5-7%, meaning most apps lose nearly everyone within a month. B2B SaaS fares better: benchmark data from 83 companies shows average Month-1 retention at 46.9% with a median of 45.25%, though it varies wildly by vertical. Fintech and Insurance lead at 57.6% while Healthcare lags at 34.5%. For categorization purposes: below 8% is poor, 10-15% is average, 15-25% is good, and 25%+ is excellent. Top B2B SaaS companies achieve Net Revenue Retention over 120% and Gross Revenue Retention above 95%, with medians at 106% NRR and 90% GRR.

Strategic Influences:

Product-market fit becomes impossible to hide by Day 30. Products solving genuine problems for the right audiences retain users. Those with weak fit watch retention keep declining without flattening. Ongoing value delivery matters: do users keep discovering benefits, achieving outcomes, and feeling like their time or money investment is justified? Competition also affects retention as users compare your product against alternatives they've discovered during the first month. Superior options pull users away from merely adequate solutions.

Benchmark Caution

Industry benchmarks give helpful context, but you have to interpret them carefully. Massive variation exists across products and contexts. Product type fundamentally shapes what retention you should expect. Daily-use communication tools need different retention patterns than monthly analytics dashboards or quarterly reporting tools. Use frequency expectations dramatically affect what "good" looks like. Products designed for daily engagement should show much higher Day 7 retention than those built for weekly or occasional use. User segment matters too. Enterprise users behave differently than SMB customers, technical users differ from non-technical audiences, and paid users retain better than free users. Industry vertical creates context-specific norms, as the 2025 data shows: Fintech and Insurance achieve 57.6% Month-1 retention while Healthcare hits only 34.5%.

The most valuable comparison is longitudinal, tracking your own retention curves over time to see improvement from product changes, onboarding optimization, or feature launches. Think of retention as a three-act story: Day 1 is the hook, Day 7 tests habit formation, and Day 30 is the moment of truth for long-term viability. Compare to your own history first. Use external benchmarks for context, not as absolute targets.

Calculating Retention

Basic Formula

Day N Retention:

(Users active on day N / Total users from cohort) × 100

Example:

- Cohort: 1,000 users signed up Jan 1

- Active on Jan 8 (Day 7): 320 users

- Day 7 Retention: 320/1000 = 32%
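The same arithmetic as a small Python helper, in case you want to script it. The function name is an assumption for illustration; the churn line simply applies the Retention + Churn = 100% relationship from earlier.

```python
def day_n_retention(active_on_day_n, cohort_size):
    """Day N retention as a percentage of the original cohort."""
    if cohort_size <= 0:
        raise ValueError("cohort_size must be positive")
    return active_on_day_n * 100 / cohort_size

retention = day_n_retention(320, 1000)  # cohort of 1,000; 320 active on Day 7
churn = 100 - retention                 # retention and churn sum to 100%

print(f"Day 7 retention: {retention:.0f}%")
print(f"Day 7 churn: {churn:.0f}%")
```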

Defining "Active"

Login:

Simplest but may not indicate real engagement.

Key Action:

More meaningful but requires defining key action.

Engagement Threshold:

Multiple actions, time spent, etc.

Recommendation:

Use meaningful activity, not just login.
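To see how much the definition of "active" can move the number, here is a hedged Python sketch. The event names, the `KEY_ACTIONS` set, and the `active_users` helper are all hypothetical; substitute whatever counts as meaningful activity in your product.

```python
# Hypothetical Day 7 event log: (user_id, event_name) pairs.
events = [
    ("u1", "login"), ("u1", "report_created"),
    ("u2", "login"),
    ("u3", "login"), ("u3", "report_created"), ("u3", "report_shared"),
]

# Assumed key actions for an imaginary reporting product.
KEY_ACTIONS = {"report_created", "report_shared"}

def active_users(events, qualifying, min_events=1):
    """Users with at least min_events events whose name is in qualifying."""
    counts = {}
    for user, name in events:
        if name in qualifying:
            counts[user] = counts.get(user, 0) + 1
    return {user for user, count in counts.items() if count >= min_events}

by_login = active_users(events, {"login"})                    # login definition
by_key_action = active_users(events, KEY_ACTIONS)             # key action definition
by_threshold = active_users(events, KEY_ACTIONS, min_events=2)  # engagement threshold

print(len(by_login), len(by_key_action), len(by_threshold))
```

In this toy data the login definition counts all three users as active, the key action definition counts two, and the stricter threshold counts one.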

Cohort Selection

Time-Based:

Users who signed up in the same period.

Size-Based:

Ensure cohorts are large enough for reliability.

Segment-Based:

Separate cohorts by user type, source, etc.

Cohort Analysis

Why Cohorts Matter

Different cohorts may have different retention:

- Product changes over time

- Seasonal variations

- Marketing channel differences

- Market changes

Creating Cohort Tables

Structure:

| Cohort | Day 1 | Day 7 | Day 14 | Day 30 |
| --- | --- | --- | --- | --- |
| Week 1 | 52% | 30% | 22% | 16% |
| Week 2 | 55% | 33% | 25% | 19% |
| Week 3 | 58% | 35% | 28% | 21% |
| Week 4 | 54% | 32% | 24% | 18% |
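A table like this can be assembled from raw signup and activity data. The sketch below is one possible way to do it with the Python standard library, assuming weekly cohorts that start on Monday and N-day retention (active on exactly day N). The data layout and the `cohort_table` name are illustrative assumptions.

```python
from collections import defaultdict
from datetime import date, timedelta

def cohort_table(signups, activity, days=(1, 7)):
    """Build {cohort week start: {day N: percent retained}}.

    signups: {user: signup date}; activity: set of (user, date) pairs.
    """
    cohorts = defaultdict(list)
    for user, signed_up in signups.items():
        # Group users by the Monday of their signup week.
        week_start = signed_up - timedelta(days=signed_up.weekday())
        cohorts[week_start].append(user)

    table = {}
    for week_start, users in sorted(cohorts.items()):
        row = {}
        for n in days:
            # A user is retained on day N if they were active exactly N days after signup.
            retained = sum(
                (user, signups[user] + timedelta(days=n)) in activity for user in users
            )
            row[n] = round(100 * retained / len(users), 1)
        table[week_start] = row
    return table

# Hypothetical data: two weekly cohorts of two users each.
signups = {
    "a": date(2025, 1, 6), "b": date(2025, 1, 7),
    "c": date(2025, 1, 13), "d": date(2025, 1, 14),
}
activity = {
    ("a", date(2025, 1, 7)), ("a", date(2025, 1, 13)), ("b", date(2025, 1, 8)),
    ("c", date(2025, 1, 14)), ("c", date(2025, 1, 20)), ("d", date(2025, 1, 21)),
}

table = cohort_table(signups, activity)
for week_start, row in table.items():
    print(week_start, row)
```

One caveat with this sketch: a cohort that has not yet reached day N will show 0% for that column, so in practice you would leave those cells blank rather than report them.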

Reading Cohort Tables

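Horizontal (Row):

How one cohort retains over time.

Vertical (Column):

How retention on a specific day changes across cohorts.

Diagonal:

How different cohorts performed at the same point in calendar time. This works when the columns step by the same interval as the cohorts (for example, weekly cohorts with weekly columns).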

Insights from Cohorts

Improving Retention:

Later cohorts retain better → changes are working.

Declining Retention:

Earlier cohorts retain better → investigate what changed.

Consistent Retention:

Similar across cohorts → product is stable.
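Reading a column for these trends amounts to looking at the change from one cohort to the next. Here is a small Python sketch using the example cohort table from above; the `cohort_deltas` helper is a name invented for illustration.

```python
# The example cohort table, as percentages keyed by day.
cohorts = {
    "Week 1": {1: 52, 7: 30, 14: 22, 30: 16},
    "Week 2": {1: 55, 7: 33, 14: 25, 30: 19},
    "Week 3": {1: 58, 7: 35, 14: 28, 30: 21},
    "Week 4": {1: 54, 7: 32, 14: 24, 30: 18},
}

def cohort_deltas(cohorts, day):
    """Percentage-point change in Day N retention from each cohort to the next."""
    values = [row[day] for row in cohorts.values()]
    return [later - earlier for earlier, later in zip(values, values[1:])]

print(cohort_deltas(cohorts, 1))  # Day 1 column
print(cohort_deltas(cohorts, 7))  # Day 7 column
```

In this example, Weeks 1 through 3 improve at every checkpoint, then Week 4 dips, which is the kind of change worth investigating.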

Segmented Retention

Why Segment

Aggregate retention hides important differences.

Example:

- Overall Day 7: 32%

- Marketing users: 45%

- Developer users: 18%

Without segmentation, you'd miss that developers need different onboarding.
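The aggregate figure is just a weighted average of the segments. The Python sketch below uses hypothetical user counts, chosen only so that the percentages match the example above, to show how a 45% segment and an 18% segment blend into 32% overall.

```python
# Hypothetical counts per segment: (cohort size, users active on Day 7).
segments = {
    "marketing": (1400, 630),
    "developer": (1300, 234),
}

def retention_pct(cohort_size, retained):
    """Retention as a percentage of the cohort."""
    return 100 * retained / cohort_size

by_segment = {name: retention_pct(size, kept) for name, (size, kept) in segments.items()}

total_size = sum(size for size, _ in segments.values())
total_kept = sum(kept for _, kept in segments.values())
overall = retention_pct(total_size, total_kept)

print(by_segment)
print(f"overall: {overall:.0f}%")
```

Because the overall number depends on the mix, a shift in acquisition toward the weaker segment can lower aggregate retention even when neither segment has changed.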

Key Segments

By Acquisition:

- Channel (organic, paid, referral)

- Campaign

- Source

By User Type:

- Role

- Use case

- Company size

By Behavior:

- Completed onboarding vs. skipped

- Feature usage patterns

- Engagement level

By Time:

- Day of week signup

- Season

- Before/after changes

Acting on Segments

High-Retention Segments:

- Understand what works

- Acquire more of these users

- Model onboarding for others

Low-Retention Segments:

- Investigate causes

- Customize onboarding

- Consider segment viability

Retention and Onboarding

Processes undocumented?

Create step-by-step SOPs that capture best practices in minutes with Glitter AI.

How Onboarding Affects Retention

Day 1 Retention:

Directly impacted by first-time experience.

Day 7 Retention:

Influenced by core value demonstration.

Day 30 Retention:

Shaped by habit formation and ongoing value.

Diagnosing Onboarding Issues

Low Day 1, OK Day 7+:

First experience problem. Users who make it past the first session tend to stick around.

OK Day 1, Low Day 7:

Initial hook but no sustained value.

Gradual Decline, No Flattening:

Never establishing sticky habits.
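These rules are simple enough to encode as a first-pass check. The Python sketch below is one possible version. The `diagnose` function is hypothetical, and its default cutoffs reuse the B2B SaaS "poor" thresholds quoted earlier (Day 1 below 30%, Day 7 below 15%) purely as placeholders; tune them to your own product.

```python
def diagnose(day1, day7, low_day1=30.0, low_day7=15.0):
    """Map Day 1 and Day 7 retention percentages to a likely onboarding issue.

    The cutoffs are placeholder assumptions, not fixed rules.
    """
    if day1 < low_day1 and day7 >= low_day7:
        return "first experience problem"
    if day1 >= low_day1 and day7 < low_day7:
        return "initial hook but no sustained value"
    # Both low or both OK: the two checkpoints alone are not enough.
    return "inspect the full curve for flattening"

print(diagnose(20, 15))
print(diagnose(55, 10))
print(diagnose(55, 35))
```

The third case is deliberately non-committal: spotting a gradual decline with no flattening requires later data points such as Day 30 and Day 90.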

Onboarding Changes to Test

To Improve Day 1:

- Faster time to value

- Clearer first action

- Better empty states

- Reduced friction

To Improve Day 7:

- Feature discovery

- Use case reinforcement

- Re-engagement triggers

- Email sequences

To Improve Day 30:

- Habit formation features

- Expanded value delivery

- Social features

- Advanced feature introduction

Predictive Analysis

Early Indicators

What users do in the first few days has remarkably strong predictive power for long-term retention. This lets teams build scoring models and intervention triggers based on leading indicators rather than waiting for lagging results.

Validated Retention Correlations:

Specific early actions correlate with dramatically higher long-term retention. Users who complete structured onboarding show roughly 2x higher Day 30 retention compared to those who skip or abandon it, which validates investing in onboarding design. Core feature adoption on Day 1 correlates with about 3x better Day 30 retention. Users who experience the primary value proposition immediately develop stronger attachment than those who explore peripheral features first. Returning on Day 2 is one of the strongest signals, with these users showing approximately 4x better long-term retention. The Day 2 return indicates Day 1 delivered enough value to motivate immediate re-engagement.

2025 research shows that triggering core feature usage within the first 3 days can raise retention from 60% to 71%, demonstrated by SaaS tools using targeted feature activation nudges. The sooner retention curves flatten, the better. Product-market fit shows a distinct shape on these curves. It converges or levels off rather than continuing to decline indefinitely.

Building Predictive Models

Building good predictive retention models lets teams identify at-risk users in real-time and intervene before churn becomes inevitable. It transforms retention from reactive measurement to proactive management.

How to Build These Models:

Start by identifying all measurable behaviors users can exhibit in their first 3-7 days: feature usage, session frequency, collaboration actions, content creation, integration connections, help resource access. Correlate each behavior with Day 30 and Day 90 retention by analyzing historical cohorts and calculating retention rates for users who did each behavior versus those who didn't. Look for high-correlation behaviors where the retention rate difference exceeds meaningful thresholds (typically 20+ percentage points), prioritizing actions that both correlate strongly with retention and happen frequently enough to be actionable at scale. Then focus onboarding on driving those high-value behaviors through flows, prompts, emails, and interventions designed to increase completion rates of the actions that predict retention.
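The correlation step can be sketched in a few lines of Python. Everything here is illustrative: the user records, the behavior names, and the helper functions are assumptions, and the 20-point cutoff is the rule of thumb mentioned above.

```python
# Hypothetical historical cohort: early behaviors plus a Day 30 outcome per user.
users = [
    {"behaviors": {"invited_teammate", "viewed_help"}, "retained_d30": True},
    {"behaviors": {"invited_teammate"}, "retained_d30": True},
    {"behaviors": {"invited_teammate"}, "retained_d30": False},
    {"behaviors": {"viewed_help"}, "retained_d30": False},
    {"behaviors": set(), "retained_d30": False},
    {"behaviors": {"viewed_help"}, "retained_d30": False},
    {"behaviors": set(), "retained_d30": False},
    {"behaviors": set(), "retained_d30": False},
]

def retention_rate(flags):
    """Percentage of True values in a list of retained flags."""
    return 100 * sum(flags) / len(flags)

def behavior_lift(users, behavior):
    """Day 30 retention of users who did the behavior minus those who did not,
    in percentage points."""
    did = [u["retained_d30"] for u in users if behavior in u["behaviors"]]
    did_not = [u["retained_d30"] for u in users if behavior not in u["behaviors"]]
    if not did or not did_not:
        return None  # cannot compare without both groups
    return retention_rate(did) - retention_rate(did_not)

lifts = {b: behavior_lift(users, b) for b in ("invited_teammate", "viewed_help")}
# Keep behaviors whose retention gap is at least 20 percentage points.
high_value = [b for b, lift in lifts.items() if lift is not None and lift >= 20]

for behavior, lift in lifts.items():
    print(f"{behavior}: {lift:+.1f} pp")
print("high-value behaviors:", high_value)
```

A real analysis would use far larger cohorts and would also check how many users perform each behavior. Keep in mind that a gap like this shows correlation, so it is worth confirming with an experiment before rebuilding onboarding around it.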

2025 research on onboarding optimization shows that better onboarding can improve first-year retention by 25%, while high feature adoption rates (70%+ usage) double the likelihood of long-term retention. Products that systematically activate key features early show significantly better retention than those allowing passive exploration without guidance toward value-creating actions.

Using Predictions

Real-Time Scoring:

Score users based on early behavior.

Intervention Triggers:

High-risk users get additional support.

Resource Allocation:

Focus human touch on salvageable users.
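As a rough illustration of scoring and triggers, the Python sketch below adds up weights for the three early signals discussed earlier. The weights and the threshold are invented for the example and only loosely echo the 2x, 3x, and 4x correlations; a real model would fit them to your own historical data.

```python
# Hypothetical weights for early signals; not derived from real data.
SIGNAL_WEIGHTS = {
    "completed_onboarding": 2,
    "used_core_feature_day1": 3,
    "returned_day2": 4,
}

def early_score(signals):
    """Sum the weights of the early signals a user has shown."""
    return sum(
        weight for name, weight in SIGNAL_WEIGHTS.items() if signals.get(name, False)
    )

def is_high_risk(signals, threshold=4):
    """Flag users whose early score falls below an assumed threshold."""
    return early_score(signals) < threshold

engaged = {
    "completed_onboarding": True,
    "used_core_feature_day1": True,
    "returned_day2": True,
}
drifting = {"completed_onboarding": True}

print(early_score(engaged), is_high_risk(engaged))
print(early_score(drifting), is_high_risk(drifting))
```

A flag like `is_high_risk` could then feed an intervention trigger, such as a check-in email or a prompt toward the core feature.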

Visualizing Retention

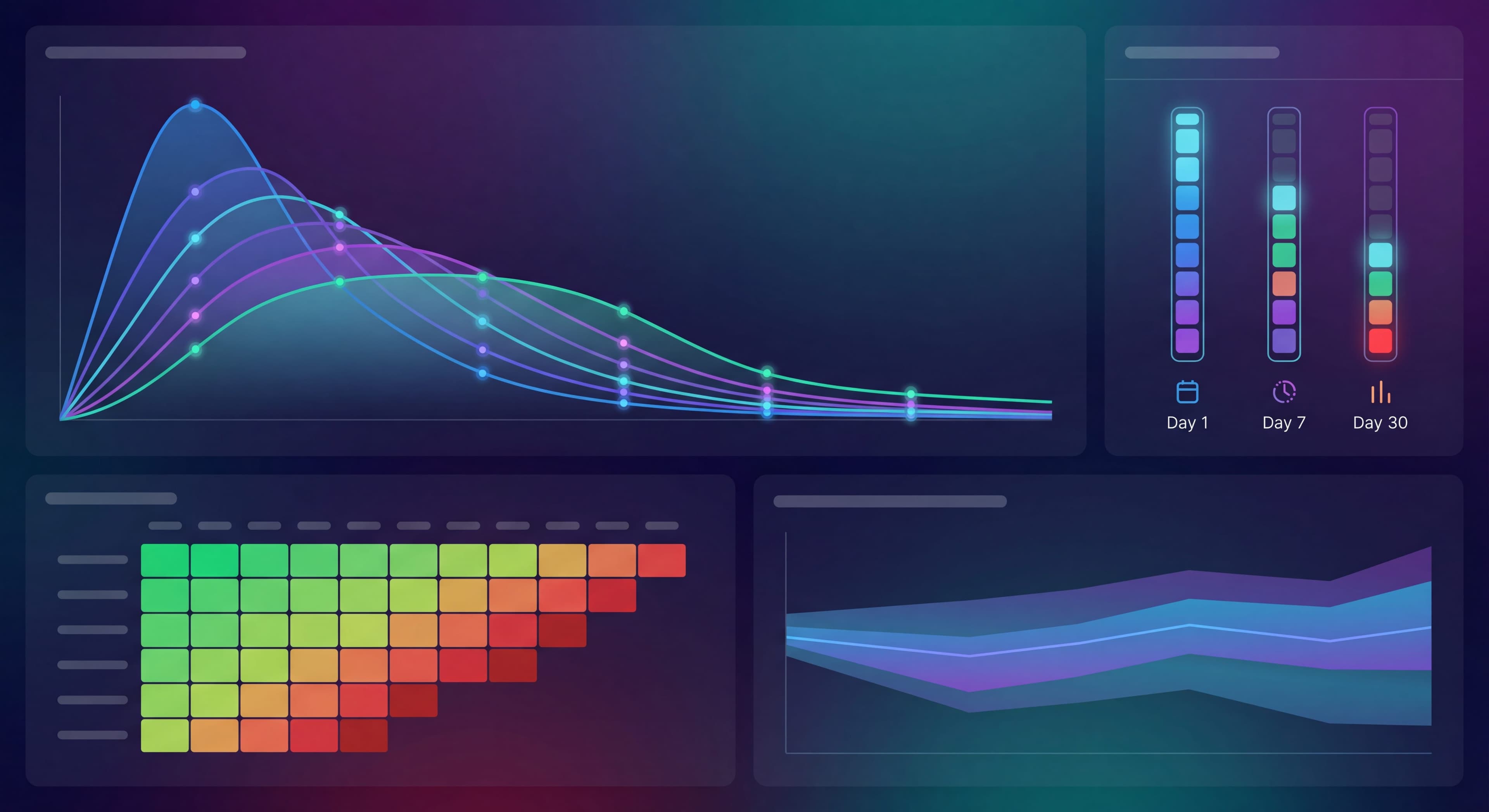

Retention Curve Chart

Line chart showing retention over time.

Best For:

- Single cohort analysis

- Comparing few cohorts

- Seeing curve shape

Cohort Heat Map

Color-coded table showing retention by cohort and time.

Best For:

- Comparing many cohorts

- Spotting trends

- Dense data visualization

Retention Band Chart

Area chart showing retention ranges.

Best For:

- Showing variance

- Confidence intervals

- Range visualization

Common Retention Mistakes

Mistake 1: Wrong Time Period

Problem: Analyzing daily retention for a weekly-use product.

Fix: Match retention period to natural use frequency.

Mistake 2: Too Short Observation

Problem: Drawing conclusions before the curve stabilizes.

Fix: Wait for the curve to flatten before drawing conclusions.

Mistake 3: Ignoring Cohort Effects

Problem: Comparing different time periods without accounting for changes.

Fix: Use cohort analysis, control for variables.

Mistake 4: Aggregate Only

Problem: Only looking at overall retention.

Fix: Segment by meaningful dimensions.

Mistake 5: Login as Activity

Problem: Counting login as "active."

Fix: Define activity as meaningful engagement.

Improving Retention

Systematic Approach

- Diagnose: Where is the biggest drop?

- Understand: Why are users leaving?

- Hypothesize: What would improve it?

- Test: Run experiments

- Measure: Track retention impact

- Iterate: Continue improving

Quick Wins for Retention

Day 1:

- Faster time to first value

- Clearer onboarding guidance

- Follow-up email same day

Day 7:

- Re-engagement email sequence

- In-app prompts for returning users

- Feature discovery nudges

Day 30:

- Expanded use case support

- Community features

- Advanced feature introduction

Long-Term Retention Strategy

Build Habits:

- Daily/weekly use triggers

- Notification strategies

- Workflow integration

Increase Value:

- Feature adoption

- Use case expansion

- Outcome delivery

Create Switching Costs:

- Data investment

- Team adoption

- Integration depth

Tools for Retention Analysis

Analytics Platforms

Amplitude:

Strong retention analysis, cohort explorer.

Mixpanel:

Retention reports, flow analysis.

Heap:

Auto-capture, retention analysis.

Specialized Tools

Pendo:

Product analytics with retention focus.

ChartMogul:

Revenue-focused retention.

Building Dashboards

Track:

- Rolling retention by period

- Cohort comparison

- Segment breakdown

- Trend indicators

Users churning before activation?

Build step-by-step SOPs that drive early engagement and flatten your retention curve with Glitter AI.

The Bottom Line

Retention curves are the ultimate scorecard for onboarding. They show not just whether users sign up, but whether they stay. Understanding their shape, their benchmarks, and how they vary by segment gives you the diagnostic power to identify problems and validate solutions.

Key Principles:

- Curve shape matters as much as the numbers

- Segment to find insights that aggregate data hides

- Early behavior predicts long-term retention

- Use cohorts to track improvement over time

- Act on specific drop-off points

The goal isn't just measuring retention. It's understanding it deeply enough to improve it systematically.

Continue learning: Activation Rate and Funnel Analysis.

Frequently Asked Questions

What is a retention curve and how do you read it?

A retention curve tracks the percentage of users who return to your product over time from their first use. The x-axis shows time since signup while the y-axis shows percentage still active. A healthy curve shows a steep initial drop that then flattens, indicating users who survive early tend to stay. A problematic curve continues declining without flattening.

What are good benchmarks for day 1 and day 7 retention?

For day 1 retention, below 30% is poor, 40-50% is average, 50-60% is good, and above 60% is excellent. For day 7 retention, below 15% is poor, 20-25% is average, 25-35% is good, and above 35% is excellent. However, benchmarks vary significantly by product type, use frequency, and industry, so compare to your own history first.

Why is cohort retention analysis important?

Cohort analysis reveals differences in retention across user groups that aggregate data hides. Different cohorts may retain differently due to product changes, seasonal variations, marketing channel differences, or market changes. Reading cohort tables horizontally shows how one cohort retains over time while vertical reading shows how retention at a specific day changes across cohorts.

How do you use retention curves to diagnose onboarding issues?

Low day 1 retention with acceptable day 7+ retention suggests a first experience problem: users who make it past the first session tend to stick around. Good day 1 but low day 7 indicates an initial hook without sustained value delivery. Gradual decline without flattening means users never establish sticky habits. Each pattern points to different onboarding improvements.

How does early user behavior predict long-term retention?

Early behaviors strongly correlate with long-term retention. Users who complete onboarding often show 2x higher day 30 retention. Those who use core features on day 1 may see 3x better retention. Returning on day 2 can indicate 4x better long-term retention. Use these correlations to build predictive models and trigger interventions for high-risk users.